Submitted by Staci R Norman on

Every day, every minute, every second, the world’s computers are amassing visual information at an extraordinary rate. Aspiring Tarantinos are sending their two-minute videos to YouTube in the hopes of going viral. Mom and Dad are uploading their Napa Valley vacation photos to Flickr. Doctors are sending patient MRIs to medical databases, and satellites are scanning the earth for evidence of sinister activity.

Dr. Kristen Grauman works at the intersection of machine learning and computer vision.

And every day people like Kristen Grauman are searching for ways to help computers sift through this avalanche of visual information so that someday our computers might be as good at analyzing visual data as they are, right now, at dealing with textual data.

“It’s a young and very unsolved area, so there’s a lot of room for big improvements,” says Grauman, an assistant professor of computer science. “We can get much better with video surveillance, medical image analysis, face recognition, autonomous robotics. As we move forward, we will find new ways to search large repositories of images and video, to connect to information based on visual cues.

“Imagine you’re out hiking and you see some unfamiliar plant or animal you want to learn about. You just take a picture with your mobile device and it connects you right to information based purely on what it looks like. Automatic annotation will be powerful on these web applications like Flickr, when we can index images by visual content. There will be more summarization of video based on the visual content, so if I have a massive collection of video, the system can automatically summarize what’s happening and can search for content that’s relevant.”

Among the advances the field has already made, says Grauman, are programs that learn to recognize common objects well enough to pick out different instances of those objects in a wide variety of settings, sizes and orientations.

Given a unique object—the Eiffel Tower, say, or the U.S. Capitol—such programs can be nearly as good at finding matches as a human being.

The more regular the texture and the layout of the objects, she says, the more robustly detectable they’re likely to be, with common categories like faces, pedestrians, and cars being particularly well suited to automated identification.

“You start with a bucket of images, and you run filters,” says Grauman. “You find out where the edges and contours are. You extract vectored descriptions of color, texture, and shape. You’re looking for themes in the data, local patches of description that are repeated, geometries that match. Given these representations, we can employ machine learning algorithms to extract models to distinguish between different categories. Over time, as the algorithm receives more human supervision in the form of labeled examples, the descriptions of objects that are encoded in the programs get better and better.”
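The pipeline Grauman describes (filter for edges, extract a descriptor, learn from labeled examples) can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the function names, the tiny synthetic images, the four-bin orientation histogram and the nearest-centroid classifier are stand-ins, not the methods used in her lab.

```python
import math

def gradient_histogram(image, bins=4):
    """Describe a grayscale image (list of rows) by a histogram of edge orientations."""
    hist = [0.0] * bins
    rows, cols = len(image), len(image[0])
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            # Simple finite-difference filters locate intensity edges.
            gx = image[r][c + 1] - image[r][c - 1]
            gy = image[r + 1][c] - image[r - 1][c]
            magnitude = math.hypot(gx, gy)
            if magnitude == 0:
                continue
            angle = math.atan2(gy, gx) % math.pi  # orientation in [0, pi)
            hist[min(int(angle / math.pi * bins), bins - 1)] += magnitude
    total = sum(hist)
    return [h / total for h in hist] if total else hist

def train_centroids(labeled_images):
    """Average the descriptors of the human-labeled examples for each category."""
    sums, counts = {}, {}
    for label, image in labeled_images:
        descriptor = gradient_histogram(image)
        previous = sums.get(label, [0.0] * len(descriptor))
        sums[label] = [s + d for s, d in zip(previous, descriptor)]
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}

def classify(image, centroids):
    """Assign the category whose average descriptor is closest."""
    descriptor = gradient_histogram(image)
    return min(centroids, key=lambda label: math.dist(descriptor, centroids[label]))

def step_image(size, vertical):
    """Toy image: a dark half and a bright half split by one straight edge."""
    half = size // 2
    if vertical:
        return [[0 if c < half else 1 for c in range(size)] for _ in range(size)]
    return [[0 if r < half else 1 for _ in range(size)] for r in range(size)]

# Hypothetical labeled examples, standing in for human supervision.
labeled = [("vertical_edge", step_image(6, True)),
           ("horizontal_edge", step_image(6, False))]
centroids = train_centroids(labeled)
print(classify(step_image(8, True), centroids))
```

Adding more labeled examples per category only changes the averages in `train_centroids`, which is the sense in which such a model can improve as supervision accumulates.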

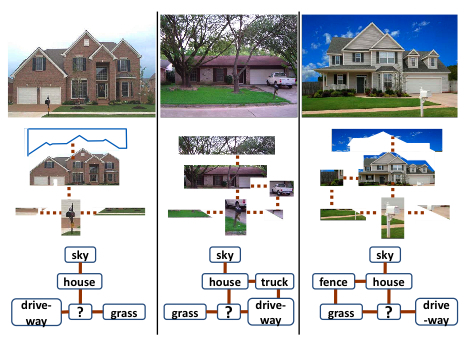

Instead of just giving a program a batch of millions of images and asking it to look for visual patterns and themes that recur in different images, Grauman and her graduate students give their program the specs for things it already knows (a house, say, and a driveway) and ask it to look for patterns that seem to cluster around those things.

Considerable strides have been made as well, says Grauman, in the area of face recognition and detection, which is why, for instance, national security agencies can quickly scan through thousands of hours of security camera footage, and tens of thousands of faces, for suspected criminals or terrorists.

The resources that have gone into creating good face recognition software, however, and the limitations of even the best programs, highlight how far the field still has to go.

“The reality is that the best face detectors are the ones that have been training, with significant human interaction, on millions of examples,” says Grauman. “You can’t dedicate that kind of effort to training software for every one of the 30,000 categories of common objects that you and I can name immediately.”

One of the big challenges, then, is how to teach programs to analyze visual content when you can’t depend on the extraordinary human energies that have been devoted (for obvious reasons) to face recognition.

This is where Grauman’s research comes in. In one recent project, for instance, Grauman and her graduate students looked to see whether they could leverage what’s already known about certain categories of objects to prime a program to identify new, previously unclassified objects.

Instead of just giving a program a batch of millions of images and asking it to look for visual patterns and themes that recur in different images, Grauman gives the program the specs for something it already knows—a car, say—and asks it to look for patterns that seem to cluster around that thing. Once these patterns near the car are identified, a human can look at them and, very quickly, categorize them as “road” or “streetlight” or “building.” Then the program can start again, with an expanded repertoire of known objects, searching for new objects.

“The idea,” says Grauman, “is don’t do it from scratch and don’t ignore what you already know. Previous approaches to unsupervised visual discovery attempted to find new categories based only on what the things looked like, and often that’s just too much to ask. It’s more reasonable to ask the system to discover these new things in the context of the old ones with which it’s already familiar.”
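A rough sketch of discovery "in the context of the old" might look like the following. It is only an illustration under stated assumptions: the region format, the greedy clustering, the cosine threshold and all names are hypothetical, and the article does not describe the actual algorithm at this level of detail.

```python
import math

def describe(region, known):
    """Appearance features plus counts of already-known objects found nearby."""
    context = [float(region["neighbors"].count(label)) for label in known]
    return list(region["appearance"]) + context

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def discover(regions, known, threshold=0.9):
    """Greedily group unknown regions; the first member represents each cluster."""
    clusters = []
    for i, region in enumerate(regions):
        descriptor = describe(region, known)
        for cluster in clusters:
            representative = describe(regions[cluster[0]], known)
            if cosine(descriptor, representative) >= threshold:
                cluster.append(i)
                break
        else:
            clusters.append([i])
    return clusters

def expand_vocabulary(known, cluster_names):
    """Add the names a human gave the discovered clusters to the known categories."""
    return known + [name for name in cluster_names if name not in known]

# Hypothetical unknown regions: all look like gray pavement, but sit near different things.
regions = [
    {"appearance": (0.50, 0.50), "neighbors": ["car", "car"]},
    {"appearance": (0.45, 0.55), "neighbors": ["car", "car"]},
    {"appearance": (0.50, 0.50), "neighbors": ["house"]},
    {"appearance": (0.55, 0.45), "neighbors": ["house"]},
]
known = ["car", "house"]
clusters = discover(regions, known)
# A person glances at each cluster and names it; the next round starts with more known objects.
expanded = expand_vocabulary(known, ["road", "driveway"])
print(clusters, expanded)
```

In this toy data, appearance alone would lump all four regions together, while the context counts separate the regions near cars from the regions near houses.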

Such a program, says Grauman, lies toward the less supervised end of the machine learning spectrum. It’s mostly on its own, searching through images and identifying patterns. Grauman is also working, however, to improve the accuracy and efficiency of programs that rely on more human input.

Another one of Grauman’s projects relies on an initial effort from human annotators, who look at photos and provide a list of some of the objects in each image, in order to boost the speed and efficacy of visual detection programs.

“It turns out we look at images and notice things in a certain way,” says Grauman, “and there turns out to be implicit information in the lists of words that the humans didn’t even know they were providing. If you list a word first, for instance, that often suggests that it’s fairly prominent in the picture. Objects that are close to each other on the list are more likely to be close to each other in the image. We have shown that by letting the system learn these implicit cues, it can provide faster and more accurate object detection on novel images.”
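The two cues Grauman mentions, order in the list and closeness within the list, can be turned into simple numbers. The scoring formulas and the function name below are assumptions chosen for illustration; how the actual system encodes or learns from these cues is not specified in the article.

```python
def tag_cues(tags):
    """Derive implicit cues from an ordered list of distinct object tags.

    prominence: earlier tags score higher (listed first suggests prominent in the picture).
    closeness: tags listed near each other score higher (suggests near each other in the image).
    """
    n = len(tags)
    prominence = {tag: 1.0 - i / n for i, tag in enumerate(tags)}
    closeness = {
        (tags[i], tags[j]): 1.0 / (j - i)
        for i in range(n)
        for j in range(i + 1, n)
    }
    return prominence, closeness

# Hypothetical annotation for one photo, in the order the person typed it.
prominence, closeness = tag_cues(["dog", "ball", "tree", "sky"])
print(prominence)
print(closeness[("dog", "ball")], closeness[("dog", "sky")])
```

A detector could, for example, search for high-prominence objects first or at larger scales, and look for an object near the likely location of its list neighbors, which is one way such cues might speed up detection.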

Over the long haul, says Grauman, it’s this kind of fusion, of humans and computers and of visual, textual and audio data streams, that may give rise to the most powerful methods of searching, organizing and analyzing the digital world.

Seeing Anew | By: Daniel Oppenheimer | Posted: Wednesday, August 4th, 2010 | CNS News